For those of you passionate about the inner workings of Windows, this post is your launchpad. We’ll be tracing the history of Windows OS, from its humble beginnings to the powerhouse it is today. This foundational knowledge will serve as a bedrock for the deeper dives into Windows development that follow. Let’s kick off this journey by charting the timeline of Windows releases. We’ll examine key milestones, architectural shifts, and the groundbreaking features that shaped the OS we know and love.

Timeline of Windows Development

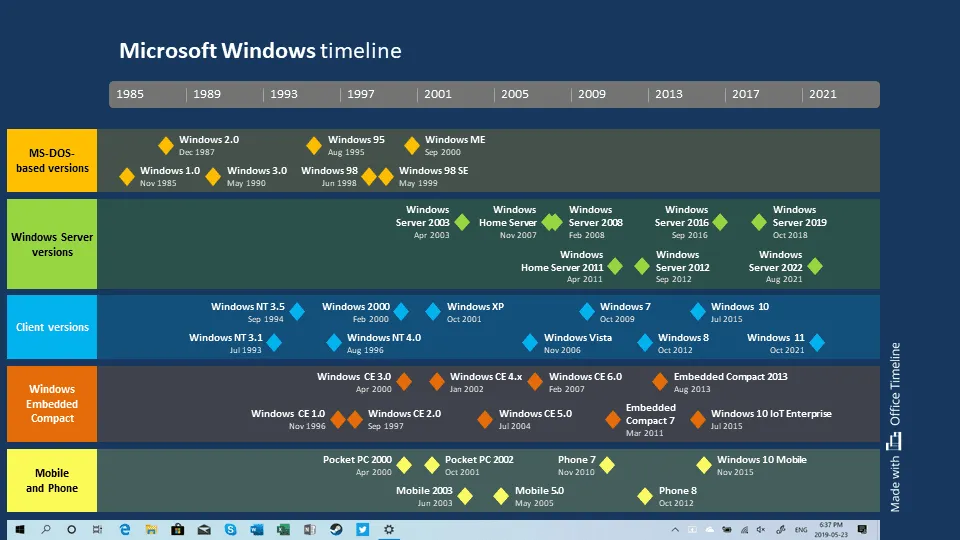

Let’s dive into the visual representation of Windows’ rich history. This timeline offers a bird’s-eye view of the platform’s evolution, focusing on the core product lines that have shaped the desktop and mobile computing landscapes.

Each diamond on this chart marks a significant Windows release, color-coded to distinguish between three primary flavors: DOS-based, NT-based, and CE-based. We’ll unpack what these terms mean shortly.

The saga of Windows begins with its unassuming predecessor, MS-DOS. This 16-bit, command-line operating system was basic, but it was the cornerstone of the early PC era. Its single-tasking, single-user design is a stark contrast to today’s OS standards, but it undeniably propelled Microsoft into the spotlight.

Early DOS-based Windows was essentially a graphical overlay on top of DOS with only cooperative multitasking between applications, a first step towards a more intuitive computing experience. However, it was with Windows 95 that the landscape dramatically shifted. A largely 32-bit OS with preemptive multitasking for 32-bit applications, it marked a pivotal moment, capturing the hearts (and desktops) of millions. This release solidified Microsoft’s dominance in the consumer OS market, laying the groundwork for the empire we know today.

Windows 95’s Win32 API was a game-changer, attracting developers and fueling the platform’s growth. However, the underlying DOS architecture proved to be a substantial bottleneck. Weak security, poor reliability, and the absence of multiprocessor support were glaring gaps in an increasingly complex and demanding computing landscape. It was clear that Microsoft needed a more robust foundation for future growth.

While DOS-based Windows was making strides in the consumer market, engineers at Microsoft were quietly crafting a different beast: Windows NT. Designed with enterprise needs in mind, NT was built from the ground up to be secure, scalable, and reliable. It was a departure from the shaky foundations of DOS, offering a solid platform for servers and workstations alike.

Fast forward to the late 90s, and Microsoft made a bold move: unifying the consumer and enterprise lines under a single OS. This was no small feat. Windows XP, released in 2001, was the culmination of years of development, blending the best of both worlds. It was a hit, capturing the hearts of users everywhere and effectively sounding the death knell for DOS-based Windows.

Meanwhile, Microsoft was exploring new frontiers with Windows CE. This lean, mean operating system was designed to fit into the tight confines of embedded devices. From PDAs to car infotainment systems, CE proved to be incredibly versatile. Its impact is still felt today, especially in the realm of mobile computing. Windows Phone 7, while a valiant effort, was built on the foundations of Windows CE. It was a starting point, but Microsoft’s ambitions extended far beyond a standalone mobile OS.

The next chapter in this saga was a bold attempt at unification. The dream: a single platform where developers could craft apps that seamlessly transitioned from desktops to phones. This would be a game-changer, setting Windows apart from the iOS and Android duopoly. Windows Phone 8, built on the Windows 8 kernel, was the first step in this direction.

The journey towards convergence intensified with the merger of the Windows and Phone divisions. This strategic move brought together the best of both worlds, resulting in Windows 10. Key innovations from Windows Phone, like stringent resource management and unique deployment strategies, were integrated into the core OS.

The culmination of this effort was the Universal Windows Platform (UWP). UWP promised a unified app development experience, allowing developers to target multiple devices with a single codebase. While the vision was ambitious, the reality proved to be more complex.

The quest for a unified Windows platform reached a turning point with Windows 10. By leveraging components from Windows Phone, Microsoft created a versatile OS capable of running on everything from desktops to Xbox consoles and even IoT devices. This marked the end of the road for Windows CE, as its functionality was largely subsumed by the broader capabilities of Windows 10.

While the journey to a single platform wasn’t without its challenges, the success of Windows 10 cemented the dominance of NT-based Windows. With Windows 11 building upon this foundation, the future of the platform looks bright.

In the next part of our deep dive, we’ll peel back the layers of Windows NT, exploring its core architecture and the design principles that have shaped its evolution.

Windows NT

NT’s reach today extends well beyond the PC. While the original Xbox and Xbox 360 used stripped-down NT variants, the Xbox One and Series consoles have embraced a more direct Windows lineage, building on Windows 8 and 10. Similarly, HoloLens and HoloLens 2 run on the core of Windows 10 for their immersive experiences. And let’s not forget Azure, Microsoft’s cloud behemoth, which relies on a specialized NT-based host OS to manage virtual machines and storage.

With this foundational understanding, we’re ready to delve into the history of Windows NT itself. Let’s uncover the design principles and architectural decisions that have shaped this cornerstone of the Windows empire.

Windows NT wasn’t born overnight. Its story begins in the late 1980s, with a small but incredibly talented team led by the legendary David Cutler. Hailing from Digital Equipment Corporation (DEC), these engineers brought a wealth of experience in building robust, enterprise-grade operating systems.

Released in 1993, NT 3.1 was a groundbreaking achievement. It was a fully 32-bit OS, designed from the ground up for multi-user environments. Features like preemptive multitasking, a sophisticated scheduler, and a secure file system were a rarity among PC operating systems at the time.

What does NT in Windows NT stand for?

But what does “NT” actually stand for? The truth is, nobody knows for sure. While “New Technology” seems like the obvious answer, other theories point to Intel’s i860 processor, code-named N10, or to a nod to VMS, the OS Cutler worked on at DEC. In the latter theory the name is a piece of wordplay: if you increment each letter in VMS, you get WNT (Windows NT).
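The letter-shift theory is easy to check for yourself. Here is a minimal sketch in Python; the function name `shift_letters` is made up for this post.

```python
def shift_letters(word: str, offset: int = 1) -> str:
    """Shift each uppercase letter forward by `offset`, wrapping from Z to A."""
    base = ord("A")
    return "".join(chr((ord(ch) - base + offset) % 26 + base) for ch in word)


print(shift_letters("VMS"))  # WNT
```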

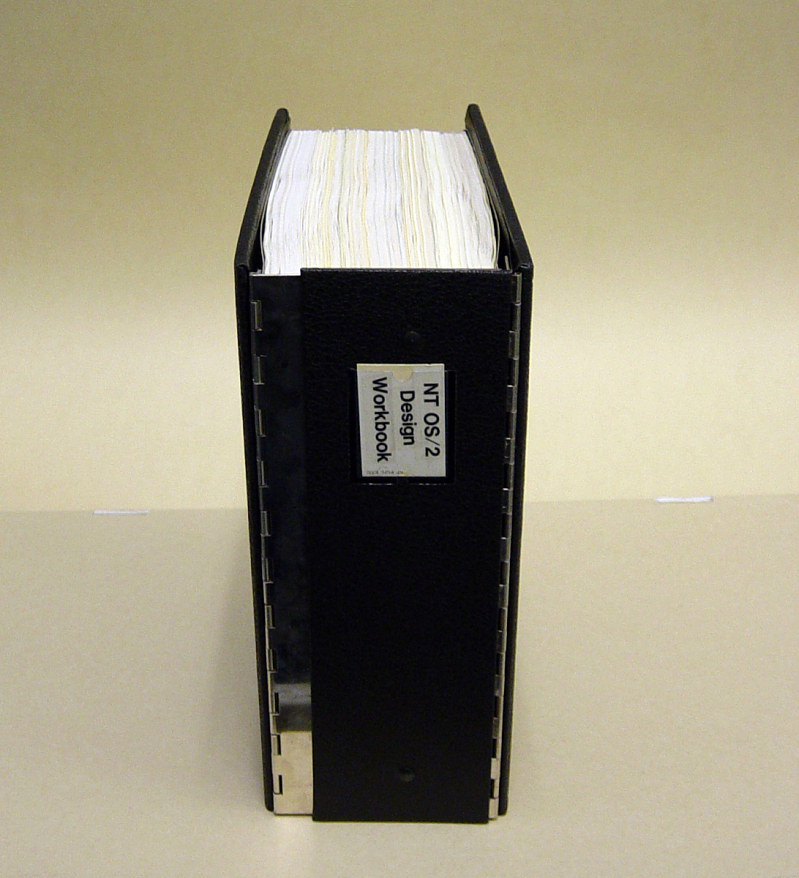

Regardless of its origin, the impact of Windows NT is undeniable. The original NT Design Workbook is now a treasured artifact, housed in the Smithsonian. It’s a tangible reminder of the vision and hard work that went into creating this foundational piece of software.

Windows NT Core Design Principles

At the heart of Windows NT lies a set of core design principles that have shaped its evolution. Let’s dive into the first of these: portability.

Portability

In the early 90s, the hardware landscape was a patchwork quilt of incompatible architectures. NT’s creators understood the need to adapt. Portability wasn’t just a nice-to-have; it was a survival instinct. The ability to swiftly transition to new platforms was crucial for the OS’s success. As we’ll explore, NT’s architecture is a masterclass in portability. Let’s break down the key factors enabling this portability.

At the core is the language choice. While assembly is essential for performance-critical areas like interrupt handling and context switching, the vast majority of Windows is written in C/C++. This high-level approach makes porting to new architectures significantly easier, as much of the codebase can be recompiled with minimal changes.

The structure of Windows itself is another crucial element. Code is meticulously organized, separating architecture-specific components from the core functionality. This modularity is a developer’s dream, simplifying the identification and adaptation of code for new platforms.

Enter the HAL, or Hardware Abstraction Layer. This intermediary shields the upper layers of the OS from the nitty-gritty of hardware differences. By providing a standardized interface, the HAL allows Windows to interact with a variety of CPUs, chipsets, and peripherals without major surgery.
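To make the idea concrete, here is a toy sketch of an abstraction layer in Python. Everything in it (the `Hal` interface, the two boards, the timer frequencies) is invented for illustration; the real HAL is a native kernel component with a very different interface. The point is only that `uptime_seconds` is written once and never needs to know which hardware sits underneath.

```python
from abc import ABC, abstractmethod


class Hal(ABC):
    """Hypothetical HAL interface: the only layer that knows the hardware."""

    @abstractmethod
    def read_timer_ticks(self) -> int:
        """Return the raw tick count of the platform timer."""

    @abstractmethod
    def ticks_per_second(self) -> int:
        """Return the frequency of the platform timer in Hz."""


class BoardAHal(Hal):
    """Made-up platform whose timer runs at 1 MHz."""

    def __init__(self, raw_ticks: int) -> None:
        self._raw_ticks = raw_ticks

    def read_timer_ticks(self) -> int:
        return self._raw_ticks

    def ticks_per_second(self) -> int:
        return 1_000_000


class BoardBHal(Hal):
    """Made-up platform whose timer runs at 32 Hz."""

    def __init__(self, raw_ticks: int) -> None:
        self._raw_ticks = raw_ticks

    def read_timer_ticks(self) -> int:
        return self._raw_ticks

    def ticks_per_second(self) -> int:
        return 32


def uptime_seconds(hal: Hal) -> float:
    """Hardware-independent code: works with any Hal implementation."""
    return hal.read_timer_ticks() / hal.ticks_per_second()


print(uptime_seconds(BoardAHal(2_000_000)))  # 2.0
print(uptime_seconds(BoardBHal(64)))         # 2.0
```

Supporting a third board in this sketch means writing one more `Hal` subclass, while the code above the interface stays untouched.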

Finally, the ability to load third-party device drivers independently is a testament to Windows’ flexibility. This means the OS can accommodate new hardware without requiring kernel modifications, accelerating the adoption of cutting-edge technologies.

With these building blocks in place, Windows has successfully conquered multiple CPU architectures. Let’s explore this journey in detail.

NT’s development began on the Intel i860, one candidate for the origin of NT’s name, although no i860 version ever shipped. From there, we see a rapid expansion. NT 3.1 embraced x86, DEC Alpha, and MIPS, with PowerPC joining the party in NT 3.51. For a brief moment, NT was a true polyglot, capable of running on four distinct CPU architectures.

However, market forces intervened, and by Windows 2000, x86 reigned supreme. The 64-bit era dawned with Itanium, but its potential was eclipsed by x64. The shift to ARM in Windows 8 marked a strategic move towards energy efficiency, initially targeting tablets and phones. ARM64’s arrival in Windows 10 expanded the platform’s reach to high-performance mobile devices.

While Windows 11 remains committed to 64-bit computing, it’s worth noting that the upcoming release will bid farewell to ARM32 applications. Despite this, the legacy of NT’s portability shines through. With eight architectures supported over the years, it’s clear that adaptability has been a core tenet of Windows development.

Extensibility

Windows has long been celebrated for its unmatched extensibility. The ability to tailor the OS to specific needs through drivers and applications has fueled an ecosystem of unprecedented size and diversity. With over 35 million unique applications and 16 million driver combinations, the numbers speak for themselves.

However, this open-door policy hasn’t come without challenges. The permissive nature of early Windows allowed applications and drivers to integrate deeply into the system, often with unintended consequences. Reliability, performance, and security have all suffered due to this unchecked freedom. Kernel-mode drivers, in particular, have been a double-edged sword. While essential for hardware interaction, they’ve also introduced vulnerabilities and compatibility headaches.

Balancing extensibility with stability and security has been a constant challenge. While modern Windows has implemented stricter controls, the legacy of a vast ecosystem means backward compatibility remains a priority. It’s a tightrope walk, but one that’s essential for the platform’s continued success.

Compatibility

Let’s delve deeper into the complexities of compatibility, another critical aspect of Windows’ evolution.

From the outset, compatibility was a cornerstone of Windows NT. In the early 90s, the application landscape was in flux, making it essential for the OS to accommodate various platforms. NT’s response was a flexible architecture centered around subsystems.

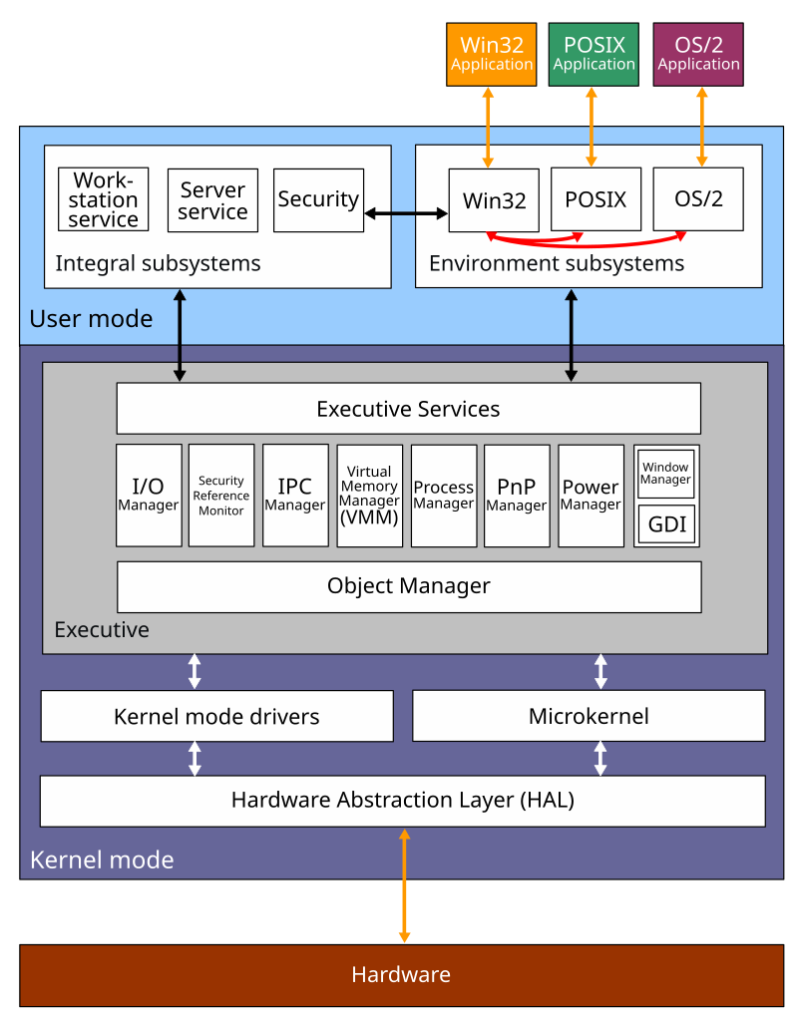

At its core, NT exposed a low-level, undocumented API for internal OS components. For applications, however, it offered multiple “personalities” or subsystems: OS/2, POSIX, and Win32. Initially, OS/2 was the star, a joint venture with IBM positioned as the primary API. In fact, early versions of NT were even called “NT OS/2.” The tides turned as Win32, riding the wave of Windows 3.x’s popularity, became the dominant API. While OS/2 support persisted, Win32 took center stage. POSIX, designed for Unix-like compatibility, also found a place within NT.

To truly appreciate the complexity of Windows NT, let’s quickly take a look at the Windows NT architecture.

At the base, we have kernel mode, home to the kernel itself, device drivers, and the HAL. Above this lies user mode, where applications and subsystems reside. Subsystems, such as Win32, OS/2, and POSIX, act as intermediaries, translating application calls into system-level operations. Most modern applications interact with the Win32 API, but some call the underlying NT API directly.

Then there is NTVDM (the NT Virtual DOS Machine), a virtual machine that runs within 32-bit editions of Windows, allowing 16-bit applications to coexist with modern software. It’s a testament to Windows’ commitment to backward compatibility that a 32-bit installation of Windows 10 can still run a decades-old DOS app.

Fast forward to the present, and we have WSL and WSA, sophisticated methods of running Linux and Android applications respectively. WSL 1 used a clever translation layer rather than virtualization, mapping Linux system calls to NT functions. WSL 2 and WSA, on the other hand, run a real Linux kernel inside a lightweight virtual machine, providing better compatibility and, for many workloads, better performance at the cost of increased resource consumption.
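The WSL 1 idea of mapping Linux system calls onto NT functionality can be pictured as a dispatch table. The sketch below is a loose illustration only: `FakeNtKernel` and its methods are invented stand-ins, and the real translation happens in kernel-mode drivers, not in a Python dictionary.

```python
ENOSYS = 38  # Linux errno for "function not implemented"


class FakeNtKernel:
    """Invented stand-in for NT services; not a real API."""

    def __init__(self) -> None:
        self.written = []

    def nt_write(self, handle: int, data: bytes) -> int:
        self.written.append((handle, data))
        return len(data)

    def nt_get_process_id(self) -> int:
        return 4242


def make_linux_syscall_table(nt: FakeNtKernel) -> dict:
    """Map Linux syscall names to handlers built on the NT-side services."""
    return {
        "write": lambda fd, buf: nt.nt_write(fd, buf),
        "getpid": lambda: nt.nt_get_process_id(),
    }


def linux_syscall(table: dict, name: str, *args):
    """Dispatch one Linux syscall; unknown calls fail the way Linux would."""
    handler = table.get(name)
    if handler is None:
        return -ENOSYS  # Linux convention: negative errno on failure
    return handler(*args)


nt = FakeNtKernel()
table = make_linux_syscall_table(nt)
print(linux_syscall(table, "write", 1, b"hello"))  # 5
print(linux_syscall(table, "fork"))                # -38
```

The unimplemented `fork` hints at why a translation approach struggles with full compatibility: every Linux call needs a faithful counterpart, whereas running a real Linux kernel in a VM gets them all at once.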

Another compatibility marvel is WOW64, which enables 32-bit applications to run on 64-bit Windows. This layer translates system calls and manages address spaces, ensuring seamless operation. ARM64 Windows goes even further, with instruction emulation allowing it to run both x86 and x64 apps.

This intricate tapestry of compatibility mechanisms is a testament to Windows’ engineering prowess. The ability to run software spanning decades and multiple architectures is a feat unmatched by many other operating systems. It’s a cornerstone of Windows’ enduring success.

Deprecations over time

While supporting a multitude of subsystems and virtualization layers is a testament to Windows’ flexibility, it’s not without its costs. Over time, certain compatibility features have been retired as they became less relevant or were superseded by newer technologies.

The OS/2 subsystem, once a cornerstone, was phased out as OS/2 itself faded from the market; Windows 2000 was the last release to include it. POSIX compatibility met a similar fate: its later incarnation, the Subsystem for UNIX-based Applications, was deprecated after Windows 7. Windows 11 ships only as a 64-bit OS, which marked the end of 16-bit application support, because a processor running in 64-bit mode cannot use the virtual 8086 mode that NTVDM depends on. Similarly, the upcoming deprecation of ARM32 reflects the evolution of hardware capabilities.

Beyond these high-level changes, maintaining compatibility with existing applications and drivers requires constant attention. Even minor OS modifications can break older software due to unexpected dependencies or latent bugs. To address this, Windows employs a sophisticated shimming mechanism. Shims are essentially workarounds that allow applications to function correctly despite changes in the underlying OS.
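One classic kind of shim reports an older OS version to an application whose version check would otherwise reject a newer Windows. Here is a toy model of that idea in Python; all names and version numbers are hypothetical, and real shims intercept native API calls rather than wrapping Python functions.

```python
def get_os_version() -> tuple:
    """Stand-in for a real OS version query (hypothetical value)."""
    return (10, 0)


def legacy_app(version_query) -> str:
    """An old app with a latent bug: it only accepts major version 5 or 6."""
    major, _minor = version_query()
    if major not in (5, 6):
        return "error: unsupported Windows version"
    return "running"


def version_lie_shim(real_query, reported=(6, 1)):
    """Wrap the version query so the app sees an older version."""
    def shimmed() -> tuple:
        return reported  # the app never sees what real_query() would return
    return shimmed


print(legacy_app(get_os_version))                    # error: unsupported Windows version
print(legacy_app(version_lie_shim(get_os_version)))  # running
```

Neither the application nor the OS changes here. The fix lives entirely in the layer between them, which is what makes shimming practical for software that can no longer be rebuilt.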

While compatibility has been a cornerstone of Windows’ success, it’s essential to balance it with the need for innovation and efficiency. By carefully managing compatibility features and leveraging techniques like shimming, Windows strives to maintain a delicate equilibrium between preserving the past and embracing the future.

Security

Security has always been paramount in operating system design, and Windows NT was no exception. From its inception, NT aimed to meet the stringent security standards set forth by the Department of Defense’s Orange Book C2 criteria. This included foundational elements like secure logon, discretionary access control, security auditing, and object reuse protection.

Secure logon ensured that users were properly authenticated before gaining system access. Discretionary Access Control (DAC) granted granular control over system resources, allowing owners to specify who could perform specific actions. Security auditing provided a detailed log of system events, aiding in incident response. Finally, object reuse protection guaranteed that sensitive data was erased from system memory before it was reallocated, preventing unauthorized access.
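To get a feel for discretionary access control, here is a heavily simplified access check in Python. It assumes an ordered list of allow and deny entries, where the first matching deny wins and access is granted only once every requested right has been allowed. The `Ace` type, the access bits, and the principal names are all made up, and the real Windows access check handles many more cases.

```python
from dataclasses import dataclass

READ, WRITE = 0x1, 0x2  # made-up access bits


@dataclass(frozen=True)
class Ace:
    """One access control entry: allow or deny `mask` for `principal`."""
    principal: str
    allow: bool
    mask: int


def access_check(acl, user, groups, desired) -> bool:
    """Walk the ACL in order and decide whether `desired` access is granted."""
    who = {user, *groups}
    remaining = desired
    for ace in acl:
        if ace.principal not in who:
            continue
        if not ace.allow and ace.mask & remaining:
            return False            # an applicable deny entry ends the check
        if ace.allow:
            remaining &= ~ace.mask  # these rights are now granted
            if remaining == 0:
                return True
    return remaining == 0           # anything not explicitly allowed is denied


acl = [
    Ace("guests", allow=False, mask=WRITE),
    Ace("alice", allow=True, mask=READ | WRITE),
    Ace("users", allow=True, mask=READ),
]
print(access_check(acl, "alice", {"users"}, READ | WRITE))  # True
print(access_check(acl, "bob", {"users"}, WRITE))           # False
```

The "discretionary" part is that the owner of the object decides what goes into the list.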

Building on this solid foundation, Windows later achieved certification under the Common Criteria, an international standard for IT security evaluation. These certifications solidified Windows’ reputation as a secure platform for government and enterprise environments.

However, the early 2000s brought a rude awakening. A barrage of high-profile malware attacks, including Code Red, Nimda, Slammer, and Blaster, wreaked havoc on Windows systems worldwide. Unlike today’s cyber threats, which are often financially motivated, these early attacks were primarily driven by a desire to cause disruption. The impact on both users and Microsoft’s reputation was immense.

Bill Gates’ Trustworthy Computing memo

These outbreaks forced a seismic shift in Microsoft’s priorities. Bill Gates’ Trustworthy Computing memo of January 2002 served as a rallying cry, demanding that security take priority over new features.

Engineers were diverted from feature development to a massive code audit, a herculean effort that delayed the release of Windows Vista but laid the groundwork for a more secure future. While hotfixes and service packs addressed immediate threats, the real transformation lay in a fundamental change of mindset.

Security was no longer an afterthought but an integral part of the development lifecycle. Dedicated security teams were established to enforce rigorous processes, from threat modeling and static analysis to penetration testing and red teaming. The concept of “security by design” became a mantra, with developers empowered to build security into their code from the ground up.

To fortify the OS, Windows underwent a series of architectural changes. Attack surface reduction became a core principle, with services isolated into individual processes and unnecessary privileges revoked. The introduction of mandatory integrity levels and app containers created a more secure execution environment. Windows Defender, a built-in antivirus solution, was a game-changer in protecting end users.
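The core rule behind mandatory integrity levels fits in a few lines. The sketch below assumes the default "no write up" policy, under which a process may not modify objects labeled with a higher integrity level than its own. The numeric values are illustrative, not the real identifiers, and this check applies in addition to the ordinary discretionary access check.

```python
from enum import IntEnum


class Integrity(IntEnum):
    """Integrity levels in increasing order of trust (illustrative values)."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    SYSTEM = 4


def may_write(subject: Integrity, obj: Integrity) -> bool:
    """No write up: a subject may modify only objects at or below its level."""
    return subject >= obj


print(may_write(Integrity.MEDIUM, Integrity.LOW))  # True
print(may_write(Integrity.LOW, Integrity.MEDIUM))  # False
```

This is why code running at a low level, such as a sandboxed browser tab, cannot tamper with a user’s medium-integrity files even when the file permissions alone would allow it.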

Virtualization technology provided another layer of defense. By isolating critical components within virtualized environments, Windows could mitigate the impact of attacks and protect sensitive data. Features like Windows Sandbox and Application Guard exemplify this approach.

While the journey is far from over, the progress made in Windows security is undeniable. The OS has evolved from a frequent target to a more resilient platform. However, the threat landscape continues to evolve, demanding ongoing vigilance and innovation.

Conclusion

Windows has come a long way since its inception, evolving from a simple graphical interface to a complex, multifaceted operating system. Its success can be attributed to a combination of factors: a relentless pursuit of compatibility, a steadfast commitment to security, and a constant drive for innovation.

While we’ve covered a lot of ground in this post, it’s important to remember that this is just the tip of the iceberg. The world of Windows is vast and complex, filled with countless intricacies and fascinating stories. We hope this exploration has ignited your curiosity and provided a solid foundation for deeper dives into the inner workings of this iconic operating system.

As we look to the future, it’s clear that Windows will continue to evolve, adapting to new challenges and opportunities. With a strong heritage of innovation and a commitment to its users, Windows is poised to remain a dominant force in the computing landscape for years to come.
